The Chilling Concern Elon Musk Had About Google's DeepMind AI

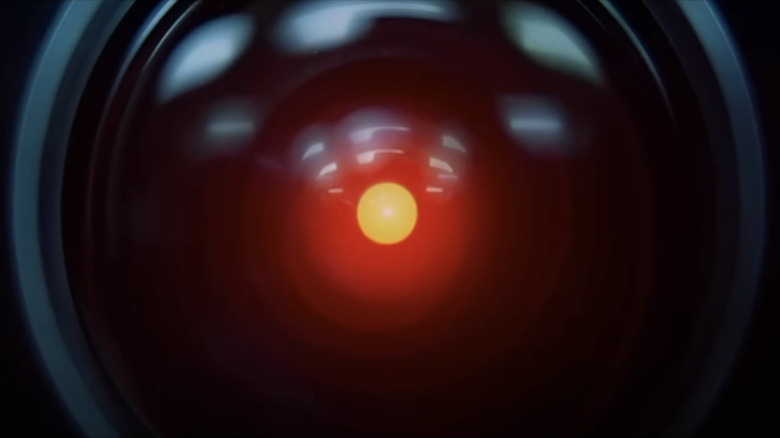

Until recently, popular notions of AI, informed by such pop culture artifacts as HAL 9000 from "2001: A Space Odyssey" and Skynet from "The Terminator," envisioned the technology as belonging to some distant future. But in recent years, AI has become a very real presence in the world. More precisely, one very specific type of the technology has: Narrow AI.

This term refers to a form of the technology that is typically designed to do one thing: play chess, create images, write text, and the like. Narrow AI is all around us, and it has been for some time; just think about how long calculators have been around. But the arrival of Large Language Models, AI art generators, and AI capable of creating entire songs independently has signaled a new age of Narrow AI, one significant enough to cause widespread consternation. From Hollywood strikes in which AI script-writing was a central issue to general anxiety over which jobs will be phased out in favor of this emergent tech, the new age of Narrow AI is already shaping up to be a potentially revolutionary moment.

But just because Narrow AI's influence is growing doesn't mean the threat of a self-aware, Skynet-like intelligence has disappeared. In fact, many incredibly smart people are extremely worried about the arrival of what is commonly known as Artificial General Intelligence, or AGI. That includes Elon Musk, who had some very specific and chilling concerns about Google's DeepMind project back when he helped co-found the now-ubiquitous OpenAI in 2015.

Elon Musk co-founded OpenAI to guard against an AI dictatorship

To ask whether artificial intelligence is good or bad is to oversimplify the issue. For one thing, there are multiple types of AI, and the kind that can read old scientific papers and make a discovery, for example, is not all that alarming. Even if we focus solely on AGI, opinions differ on whether such a thing would be a net good or a net bad for the world, and even that framing doesn't capture how complex the issue is.

AGI is typically thought of as a form of artificial intelligence akin to the human mind, capable of carrying out various tasks and adapting to new situations just as our own brains do. Developing such an AI raises all sorts of considerations, from the so-called alignment problem, which focuses on ensuring the technology is aligned with the values of humanity, to the containment problem, which focuses on keeping a human-level artificial intelligence under human control.

For Elon Musk and Sam Altman, the real concern was who, exactly, would develop an AGI first and how they would use it. This was a big part of why the pair co-founded OpenAI as a non-profit organization in 2015, hoping to guard against human-level AI coming under the sole control of tech giants such as Google, which in 2014 had acquired AI research company DeepMind. Now, newly released emails have revealed the extent to which Musk and his colleagues were worried about a real AGI dictatorship developing under Google.

Elon Musk sued OpenAI and released internal emails

On its website, DeepMind touts itself as "a team of scientists, engineers, ethicists and more, working to build the next generation of AI systems safely and responsibly." But it seems Elon Musk was unconvinced by such utopian language. He and Sam Altman founded OpenAI with the goal of ensuring no one major tech company, especially Google, would develop AGI first and thereby gain the opportunity to establish control over the technology.

Since then, the OpenAI experiment has changed dramatically: the organization has become a for-profit company, while Musk has left to found his own firm, xAI. The tech entrepreneur has also taken legal action against his former company multiple times, filing a lawsuit and various amendments throughout 2024 claiming that OpenAI, the company behind the hugely popular ChatGPT chatbot, has essentially become the very thing it set out to prevent, or what the filings call a "for-profit, market-paralysing gorgon" (via the BBC). An OpenAI spokesperson told the outlet, "Elon's third attempt in less than a year to reframe his claims is even more baseless and overreaching than the previous ones."

Leaving aside the various claims made in the lawsuit, one thing to come out of the whole debacle has been the release of numerous emails from around the time of OpenAI's founding, many of which reveal the depth of Musk's and his collaborators' fears of an AGI dictatorship.

OpenAI founders wanted to prevent an AGI monopoly

As Transformer reports, in an email from 2016, Elon Musk wrote that DeepMind was "causing [him] extreme mental stress." The entrepreneur worried that if DeepMind were to create an AGI first, it would spell bad news for everyone else, specifically because of what Musk saw as the company's "one mind to rule the world philosophy." In his view, DeepMind founder Demis Hassabis could easily create an AGI dictatorship by being the first to develop human-level artificial intelligence.

It wasn't just Musk who was worried about DeepMind and Google's AGI ambitions, either. OpenAI co-founder Sam Altman sent a 2015 email revealing that he'd spent a lot of time pondering whether it was possible to stop humanity from developing AI, and had concluded that the answer was "almost definitely not." As such, he shared Musk's view that the first to develop an AGI should not be a massive corporation such as Google, writing, "If it's going to happen anyway, it seems like it would be good for someone other than Google to do it first."

Elsewhere, two other OpenAI co-founders, Ilya Sutskever and Greg Brockman, expressed their own concern about DeepMind developing AGI. In an email, the pair described OpenAI as a project geared toward avoiding an AGI dictatorship. "You are concerned that [DeepMind CEO Demis Hassabis] could create an AGI dictatorship," they wrote. "So do we" (via The Verge).

What's all the AGI anxiety about?

It's impossible to say what the development of true Artificial General Intelligence will look like or how it will affect the world. But some very smart people are very worried about it. Nick Bostrom, the author and Oxford professor who formerly headed the university's Future of Humanity Institute, has been one of the most vocal on the topic, most famously in his 2014 book "Superintelligence." In the book, Bostrom writes, "Before the prospect of an intelligence explosion, we humans are like small children playing with a bomb." As the professor more recently told Wired, "I think it would be desirable that whoever is at the forefront of developing the next generation AI systems, particularly the truly transformative superintelligent systems, would have the ability to pause during key stages. That would be useful for safety."

It's unclear whether DeepMind, or any large tech company developing AI, will have these sorts of safeguards in place, despite their claims to be working "safely." That said, plenty of informed people maintain that so-called AI doomerism is simply misguided and that there's nothing to worry about.

What is clear, however, is that while Google DeepMind continues to make it easier to identify AI-generated text and to watermark the products of today's Narrow AI, there is a bigger picture to all of this. That picture is still coming into focus, but AGI is at its center, and Elon Musk isn't the only one worried about it.